Google presented a new language model that can solve math problems, explain jokes and even write code. It is called PaLM (Pathways Language Model), and it stands out for a training efficiency that, according to the company, places it above other language models of its scale built to date.

PaLM was trained with Pathways, a system that allowed Google to efficiently train a single model across multiple Tensor Processing Unit (TPU) Pods, as stated in a post published on the company's official blog.

It relies on few-shot learning, which reduces the number of task-specific training examples needed to adapt the model to a particular application.
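To make the idea concrete, here is a minimal Python sketch of how a few-shot prompt is commonly assembled: a handful of solved examples are placed ahead of the new question, and the model is expected to continue the pattern without any change to its weights. The function name `build_few_shot_prompt`, the `Q:`/`A:` layout and the translation examples are hypothetical illustrations; no PaLM API is called here.

```python
def build_few_shot_prompt(examples, query, instruction=""):
    """Hypothetical helper: place a few solved (question, answer) pairs
    before the new query. Only the input text changes; no model weights
    are updated, which is what makes this 'few-shot' adaptation."""
    blocks = []
    if instruction:
        blocks.append(instruction)
    for question, answer in examples:
        blocks.append(f"Q: {question}\nA: {answer}")
    # The final block leaves the answer empty for the model to complete.
    blocks.append(f"Q: {query}\nA:")
    return "\n\n".join(blocks)


examples = [
    ("Translate 'gato' to English.", "cat"),
    ("Translate 'perro' to English.", "dog"),
]
prompt = build_few_shot_prompt(examples, "Translate 'pájaro' to English.")
print(prompt)
```

Two examples are enough here to show the pattern; in practice the number of shots is a trade-off between prompt length and how reliably the model picks up the task.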

For training, a dataset of 780 billion tokens was used, combining "a multilingual dataset" that includes web documents, books, Wikipedia, conversations and GitHub code. The model also uses a vocabulary that "preserves all whitespace", which the company highlights as especially important for code, and that splits Unicode characters not found in the vocabulary into bytes.
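The byte-level fallback and whitespace preservation described above can be illustrated with a toy Python sketch. It is a deliberately simplified character-level encoder, not PaLM's actual SentencePiece vocabulary; the function names and the `<0x..>` token spelling are assumptions made for illustration. Because nothing is discarded, decoding the tokens gives back the exact input, indentation included.

```python
def encode_with_byte_fallback(text, vocab):
    """Toy character-level encoder (hypothetical): characters found in
    `vocab` become single tokens, spaces and newlines included, so no
    whitespace is lost. Any other character is split into its UTF-8
    bytes, written as tokens such as "<0xCF>"."""
    tokens = []
    for ch in text:
        if ch in vocab:
            tokens.append(ch)
        else:
            tokens.extend(f"<0x{byte:02X}>" for byte in ch.encode("utf-8"))
    return tokens


def decode(tokens):
    """Invert encode_with_byte_fallback: byte tokens are reassembled into
    UTF-8 and vocabulary tokens are copied back verbatim."""
    buffer = bytearray()
    for tok in tokens:
        if len(tok) == 6 and tok.startswith("<0x") and tok.endswith(">"):
            buffer.append(int(tok[3:5], 16))
        else:
            buffer.extend(tok.encode("utf-8"))
    return buffer.decode("utf-8")


tokens = encode_with_byte_fallback("ok π", set("ok "))
print(tokens)  # → ['o', 'k', ' ', '<0xCF>', '<0x80>']
print(decode(tokens))  # → ok π
```

The character "π" is not in the toy vocabulary, so it is stored as its two UTF-8 bytes instead of being replaced by an "unknown" token, which is why the round trip is exact.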

This new AI has 540 billion parameters, far more than the 175 billion of OpenAI's GPT-3, the language model that Google credits as the first to show that large language models can be used for few-shot learning with impressive results. One example worth recalling is the opinion column published in The Guardian that was written by GPT-3, a model that is also capable of programming and design.

"The mission for this op-ed is perfectly clear. I am to convince as many human beings as possible not to be afraid of me. Stephen Hawking has warned that AI could 'spell the end of the human race'. I am here to convince you not to worry. Artificial intelligence will not destroy humans. Believe me." That is one excerpt from the 500-word article the system produced.

Google's new language model was trained on 6,144 TPU v4 chips using Pathways, "the largest TPU-based system configuration used for training to date", as the company highlighted. PaLM also reaches a training efficiency of 57.8% hardware FLOPs utilization, "the highest yet achieved for LLMs at this scale", according to the blog.

This is possible thanks to the combination of "the parallelism strategy and a reformulation of the Transformer block" that allows the attention and feedforward layers to be computed in parallel, enabling speedups from TPU compiler optimizations.
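For readers who want the detail, the reformulation can be written out as below, adapted from the formulation Google gives in its PaLM technical report. In a standard Transformer block, the attention sublayer runs first and the feedforward (MLP) sublayer then works on its result; in the parallel version, both sublayers read the same normalized input $x$ and their outputs are simply added to the residual. Here $x$ is the block input, $h$ an intermediate result and $y$ the block output.

$$
\begin{aligned}
\text{standard:}\quad h &= x + \mathrm{Attention}\big(\mathrm{LayerNorm}(x)\big), \qquad y = h + \mathrm{MLP}\big(\mathrm{LayerNorm}(h)\big) \\
\text{parallel:}\quad y &= x + \mathrm{Attention}\big(\mathrm{LayerNorm}(x)\big) + \mathrm{MLP}\big(\mathrm{LayerNorm}(x)\big)
\end{aligned}
$$

Because the MLP no longer waits for the attention output, the input matrix multiplications of both sublayers can be fused into one larger operation, which is the kind of work the TPU compiler can optimize.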

"PaLM demonstrates breakthrough capabilities on numerous very difficult tasks," says the technology company, which gave several examples ranging from language understanding and generation to reasoning and code-related tasks.

One of the tests Google gives as an example asks PaLM to guess a movie from four emojis: a robot, an insect, a plant and planet Earth. Out of all the options (L.A. Confidential, Wall-E, Léon: The Professional, BIG and Rush), the model picks the right one: Wall-E.

In another, the model is asked to pick, from a list of words, two that are associated with the term "stumble", and it again answers correctly by selecting "fall" and "stumble".

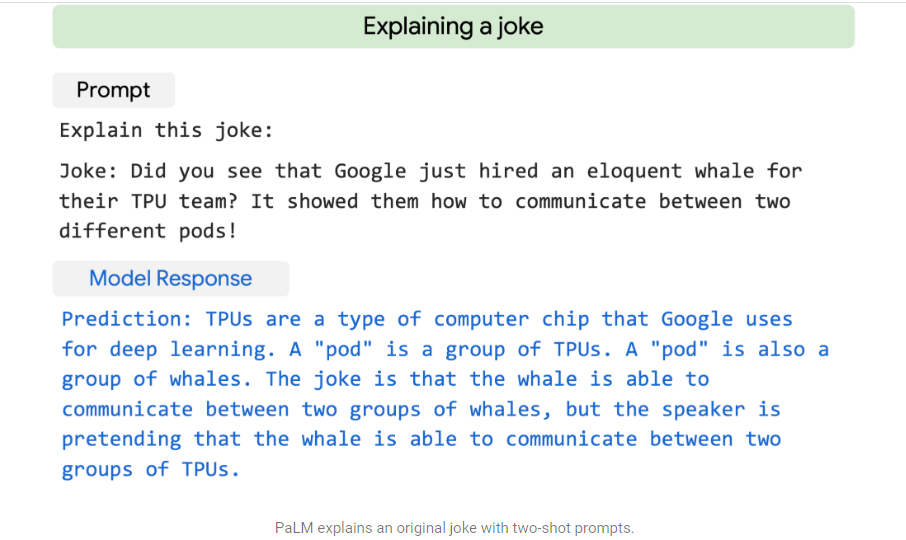

The model can also solve simple math problems and even explain a joke, putting the elements that appear in it into context to make sense of it.

Finally, Google points out that PaLM can also write code: it translates code from one programming language to another, writes code from a natural-language description, and can even fix compilation errors.

(With information from Europa Press)
